CURSOR INSTRUCTIONS

Our AI agent offers emotionally intelligent, real-time voice interactions that integrate seamlessly into your app’s workflow. By understanding user preferences and emotional cues, the agent can perform complex tasks like updating user data, providing contextual information, or executing transactions without the need for manual navigation. Built for scalability and adaptability, it supports multi-user conversations, real-time decision-making, and personalized experiences across a variety of languages and dialects. This elevates customer engagement and operational efficiency, making our AI a dynamic TRVL advisor.

1. Define Use Cases

- Personalized Recommendations: Use the custom language model to offer emotionally aware suggestions for hotels, restaurants, or travel experiences based on user sentiment.

- Emotional Feedback: Capture user emotion during conversations (e.g., excitement, frustration) and adjust responses in real-time, refining the travel recommendations or adjusting tone accordingly.

2. Set Up a Custom Text Socket

- Use Hume’s repository to create a socket, enabling real-time communication between your model and the EVI. This will be the backbone for processing user input.

- Serve the socket using Ngrok so Hume’s system can access it publicly. This is where your Travel Assistant’s language model will listen and respond.

3. Configure the Voice Settings on Hume

- In the Hume dashboard, create a new voice configuration with the custom language model and set the WSS URL for your Ngrok-served socket. This enables Hume to route the conversations through your model.

4. Conversation Handling

- When Hume’s EVI sends the conversation thread to your socket, use your AI Travel Assistant’s logic to process the emotional data alongside the conversation history.

- For instance, if a user expresses excitement about a 5-star hotel, the assistant could offer a high-end restaurant suggestion to complement that vibe.

5. Iterate on Responses

- Use your custom FLUX model or another fine-tuned language model to generate emotionally aware responses. Feed these back into Hume via the socket connection.

- Implement a

custom_session_idto maintain state across conversations, which is ideal for users interacting with the assistant over multiple sessions.

6. Real-time Adjustments

- Use WebSockets to process conversations in real-time. For instance, if a user starts feeling overwhelmed by options, the assistant could simplify choices and reduce complexity in the dialogue.

7. Voice Personalization

- Add elements like tone and pace modifications to match user emotional feedback, ensuring the voice interface feels more natural and empathetic.

Perfect! Let’s break it down into manageable steps so we can move forward with configuring your custom socket for Hume’s Empathic Voice Interface and defining emotional triggers for the AI Travel Assistant.

Step 1: Custom Text Socket Setup

- Repository Setup: Download or clone Hume’s example repository.

Ngrok Setup: Install Ngrok to expose your local server to the internet, allowing Hume’s system to access the custom text socket.

ngrok http 5000

This will generate a public URL (e.g., https://xxxx.ngrok.io) that you’ll use as the WSS URL when setting up your Hume custom language model.

Step 2: Host the Socket

- Modify the server logic in the Hume repository to handle your custom language model’s inputs and outputs.

- Ensure that your socket code is running properly and reachable via the public Ngrok URL.

Step 3: Voice Configuration in Hume

- Go to the Hume platform and create a new voice configuration.

- Set the "Custom Language Model" as the type.

- Insert your Ngrok-generated WebSocket URL (WSS URL) into the configuration.

Step 4: Handling API Requests

- In your AI Travel Assistant, every request to Hume’s API must now include the

config_idof the voice configuration you just created. This ensures that conversations route through your custom model.

Step 5: Processing Data

- When Hume sends JSON payloads containing conversation history, apply your custom business logic using your language model (e.g., FLUX or a fine-tuned GPT-4 variant).Example Processing:

- Parse emotional cues (e.g., "excitement" for 5-star hotels, "disappointment" for unavailable bookings).

- Adjust the recommendations based on these emotional cues, ensuring they align with the user’s current state.

Step 6: Emotional Triggers & Response Customization

Define key emotional triggers in your Travel Assistant:

- Excitement: If the user shows excitement (e.g., "I’m so thrilled about staying here!"), your model should recommend more exclusive or high-end experiences (e.g., Michelin-star restaurants, luxury activities).

- Frustration: When a user is frustrated or indecisive, simplify the options. Offer fewer, more straightforward choices (e.g., nearby restaurants that are highly rated but accessible).

- Curiosity: If curiosity is detected (e.g., "Tell me more about this area"), provide detailed information, possibly pulling from a curated database of local tips or experiences.

Step 7: WebSocket Handling

Integrate Hume’s WebSocket client SDK for easier handling of real-time conversations. This will help with things like intellisense and WebSocket functions:

from hume import HumeStream

Would you like to dive into any of these steps in more detail or help with configuring the socket and voice settings on the Hume platform?

Let’s dive deeper into the Custom Text Socket Setup and Voice Configuration steps to ensure smooth integration with Hume’s EVI.

Step 1: Custom Text Socket Setup

1.1 Clone Hume's Example Repository

- Visit the Hume documentation and find the example repository for setting up the custom text socket.

Clone or download the repository:

git clone https://github.com/HumeAI/custom-text-socket-example.git

cd custom-text-socket-example

1.2 Install Dependencies

If the repository requires additional libraries, install them. Likely dependencies include WebSockets and Flask:

pip install -r requirements.txt

1.3 Modify Socket Logic

- Open the Python or Node.js script in the repo. You’ll see a WebSocket server that receives messages, processes them, and responds.

Replace the placeholder logic with your own model's logic (e.g., processing conversation history and applying emotional responses via your custom language model, such as FLUX or GPT-4).

@socket.on('message')

def handle_message(data):

# Process incoming data (conversation history)

response = my_custom_language_model(data)

# Send response back to Hume

socket.send(response)

1.4 Serve the Socket Publicly Using Ngrok

- Copy the generated

https://xxxx.ngrok.ioURL. This is the WSS URL you will provide to Hume.

Launch Ngrok to expose your local WebSocket server to the public internet:

ngrok http 5000

Step 2: Configure Hume Voice Settings

2.1 Log in to Hume Platform

- Sign in to your Hume account and go to the Voice Settings tab.

2.2 Create a Custom Voice Configuration

- Click New Configuration and select Custom Language Model.

- Input the WSS URL (Ngrok link) generated earlier.

2.3 Define Voice Parameters

- Configure additional parameters like pitch, speed, or any personalized tone options. This will tailor how your Travel Assistant responds to users’ emotional cues in real time.

Step 3: Making API Requests

3.1 Use config_id

When calling Hume’s API from your Travel Assistant, make sure you include the config_id of the voice configuration you just created:

response = requests.post('https://api.hume.ai/v1/conversations',

headers={'Authorization': 'Bearer <API_KEY>'},

json={'config_id': '<your_voice_config_id>', 'message': user_message})

Step 4: Processing Data & Handling Emotional Triggers

4.1 Process Emotional Data

When Hume sends back conversation history in JSON format, detect emotional cues using your language model:

{

"conversation_history": [

{"text": "I'm thrilled about my trip!", "emotion": "excitement"}

]

}

4.2 Apply Emotional Triggers

- Based on the detected emotion (

"excitement"in this case), the AI Travel Assistant can trigger specific actions:- Excitement: Recommend exclusive experiences like luxury suites, fine dining.

- Frustration: Simplify recommendations, offer easier-to-digest options.

- Curiosity: Provide detailed background on locations, hotel history, or local attractions.

4.3 Send Processed Response Back to Hume

Once your custom language model generates a response, send it back through the WebSocket connection:

socket.send(json.dumps({"response": travel_assistant_response}))

Step 5: WebSocket Handling with Hume SDK

5.1 Install Hume WebSocket SDK

To streamline WebSocket handling and real-time processing, you can use Hume’s Python SDK:

pip install hume

5.2 Set Up a WebSocket Client

Use the SDK to establish a connection and listen for messages:

from hume import HumeStream

stream = HumeStream("<API_KEY>", config_id="<your_voice_config_id>")

stream.start()

With this setup, you should have your custom text socket up and running, processing emotional data and responding to users through the AI Travel Assistant.

Do you want to proceed by implementing the emotional trigger logic within the custom model, or do you need help with testing and troubleshooting the socket?

Let’s go ahead and implement the emotional trigger logic within your custom model for the AI Travel Assistant. This logic will detect emotions in user conversations and adjust responses accordingly, ensuring a more personalized and empathetic experience.

Step-by-Step Guide to Implementing Emotional Triggers

Step 1: Define Emotional Cues and Corresponding Actions

You’ll first need to establish the emotional cues the model will respond to and the corresponding actions for each emotion.

Sample Emotional Cues:

- Excitement: Trigger recommendations for exclusive experiences (e.g., 5-star hotels, Michelin-star restaurants).

- Frustration: Simplify options and offer reassuring responses.

- Curiosity: Provide more detailed, informative responses.

- Indecision: Suggest options with fewer details and offer encouragement.

Step 2: Modify Your Custom Model's Logic

You’ll need to modify the logic in your custom text socket to detect emotional cues and apply the corresponding business logic.

Python Example:

Let’s assume you’re using a Python-based WebSocket server for this. You’ll integrate the emotional trigger detection logic right after receiving the JSON payload from Hume.

import json

def process_emotion(data):

# Extract emotion from the conversation history

conversation_history = data.get("conversation_history", [])

# Initialize a default response

response = "I'm here to help with your travel plans."

for entry in conversation_history:

emotion = entry.get("emotion", None)

text = entry.get("text", "")

# Implement emotional triggers based on detected emotion

if emotion == "excitement":

response = recommend_luxury_experiences(text)

elif emotion == "frustration":

response = offer_simplified_options(text)

elif emotion == "curiosity":

response = provide_detailed_information(text)

elif emotion == "indecision":

response = suggest_default_options(text)

else:

response = fallback_response(text)

return response

def recommend_luxury_experiences(user_text):

# Custom logic to suggest high-end experiences

return "It sounds like you're excited! How about upgrading to our exclusive penthouse suite?"

def offer_simplified_options(user_text):

# Custom logic to simplify options

return "I understand, let me narrow it down to just two top-rated hotels for you."

def provide_detailed_information(user_text):

# Custom logic to give detailed information

return "Here's a bit more info: This hotel is known for its rich history and stunning views."

def suggest_default_options(user_text):

# Custom logic for indecision

return "Not sure what to choose? Here's a popular 5-star hotel option that's been highly recommended."

def fallback_response(user_text):

# Default response if no emotion is detected

return "I'm here to assist you with your travel plans!"

Step 3: Incorporate Emotional Analysis from Hume

Ensure the data received from Hume includes emotional analysis. Hume’s EVI will provide the emotional context alongside the conversation history, which can be fed into your logic for processing.

Here’s an example of how you’d extract the emotion from Hume’s JSON payload and trigger the corresponding logic:

@socket.on('message')

def handle_message(data):

# Parse JSON data

json_data = json.loads(data)

# Process the emotional triggers based on the conversation

response = process_emotion(json_data)

# Send the generated response back to Hume

socket.send(json.dumps({"response": response}))

Step 4: Testing Emotional Responses

4.1 Create Test Cases

- Excitement Scenario: Test the model with phrases like "I'm really excited about my trip!" to trigger luxury recommendations.

- Frustration Scenario: Test with user frustration signals like "I can't decide, it's overwhelming" to simplify options.

- Curiosity Scenario: Test with questions like "Tell me more about this hotel" to trigger detailed responses.

4.2 Validate Socket Communication

- Ensure that your socket is receiving the emotional data from Hume and that the appropriate logic is applied. You can do this by logging the incoming messages and outgoing responses.

print(f"Received emotion data: {data}")

print(f"Generated response: {response}")

Step 5: Enhancing Responses Based on Travel Personas

Given the personas you’ve already defined (Anthony, Kelly, James), you can further customize the responses based on both emotion and persona:

def process_emotion(data, persona):

conversation_history = data.get("conversation_history", [])

response = "I'm here to help with your travel plans."

for entry in conversation_history:

emotion = entry.get("emotion", None)

text = entry.get("text", "")

# Adjust response based on persona and emotion

if emotion == "excitement":

response = recommend_luxury_experiences(text, persona)

elif emotion == "frustration":

response = offer_simplified_options(text, persona)

elif emotion == "curiosity":

response = provide_detailed_information(text, persona)

elif emotion == "indecision":

response = suggest_default_options(text, persona)

else:

response = fallback_response(text, persona)

return response

Now, each persona can receive more tailored responses. For instance, Anthony (boutique hotels, local experiences) will get different recommendations than James (luxury 5-star hotels).

Step 6: Deploy and Refine

- Deploy the updated model to your WebSocket server and use Ngrok to make it accessible to the Hume platform.

- Test and Refine: After deploying, perform testing in real-world scenarios to validate that the emotional responses match the user experience you're aiming to create.

Do you want to focus next on testing the socket communication with Hume or explore further enhancements like persona-based recommendations?

To enhance the AI Travel Assistant with persona-based recommendations, we can combine emotional triggers with persona preferences to deliver highly personalized suggestions. Since you’ve defined personas like Anthony, Kelly, and James, we’ll integrate these preferences into the response generation logic.

Step 1: Refine Emotional Trigger Logic with Personas

You’ll create customized responses that blend emotional triggers with the unique travel preferences of each persona. This will involve adjusting recommendations based on both emotion and persona data.

Example Personas:

- Anthony: Prefers boutique hotels with authentic local experiences.

- Kelly: Focuses on resorts near surf spots and values sustainable travel.

- James: Specializes in luxury 5-star hotels and Michelin-star restaurants.

Sample Code for Emotion + Persona Logic:

def process_emotion_with_persona(data, persona):

conversation_history = data.get("conversation_history", [])

response = "I'm here to help with your travel plans."

for entry in conversation_history:

emotion = entry.get("emotion", None)

text = entry.get("text", "")

# Customize based on both emotion and persona

if emotion == "excitement":

response = recommend_experience(text, persona, "excited")

elif emotion == "frustration":

response = recommend_experience(text, persona, "frustrated")

elif emotion == "curiosity":

response = recommend_experience(text, persona, "curious")

elif emotion == "indecision":

response = recommend_experience(text, persona, "indecisive")

else:

response = fallback_response(text, persona)

return response

def recommend_experience(user_text, persona, emotion):

if persona == "Anthony":

if emotion == "excited":

return "Sounds exciting! How about a boutique hotel with a rooftop view of the city?"

elif emotion == "frustrated":

return "Let me simplify the options. Here's a charming local stay with great reviews."

elif emotion == "curious":

return "This hotel offers a walking tour of the local area. Want to know more?"

elif emotion == "indecisive":

return "Not sure? This local hotel is very popular and provides an authentic experience."

elif persona == "Kelly":

if emotion == "excited":

return "You’ll love this resort near a famous surf spot, with a sustainable focus!"

elif emotion == "frustrated":

return "Let’s keep it simple. Here’s a great eco-resort with a laid-back vibe."

elif emotion == "curious":

return "This resort is dedicated to eco-friendly practices. Want more details?"

elif emotion == "indecisive":

return "If you're undecided, this sustainable resort is a great choice for surfers."

elif persona == "James":

if emotion == "excited":

return "How about staying in a luxurious 5-star hotel, with access to a Michelin-starred restaurant?"

elif emotion == "frustrated":

return "Let me narrow it down. Here’s a high-end hotel with rave reviews."

elif emotion == "curious":

return "This hotel has won awards for its Michelin-starred dining. Want more details?"

elif emotion == "indecisive":

return "Need a nudge? This 5-star hotel is highly recommended by other luxury travelers."

return "I'm here to assist with your travel needs!"

Step 2: Storing Persona Preferences

You’ll want to ensure the AI Travel Assistant stores persona preferences for each user. This could be achieved by adding a persona field in the user session, allowing the assistant to remember and apply preferences automatically.

Example: Adding Persona Data to the Session

user_session = {

"user_id": 12345,

"persona": "Kelly",

"preferences": {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"]

}

}

This session data can be used when generating responses, ensuring that every recommendation is personalized based on the user’s persona.

Step 3: Dynamic Persona Switching

Sometimes, users may want to explore different types of experiences. You can offer the option to dynamically switch between personas based on their input.

Example: Dynamic Persona Selection

def switch_persona(user_input):

if "boutique" in user_input:

return "Anthony"

elif "sustainable" in user_input or "surf" in user_input:

return "Kelly"

elif "luxury" in user_input or "Michelin" in user_input:

return "James"

return None

# Example of switching based on a user message

user_persona = switch_persona("I’m looking for a luxury stay with Michelin dining")

This feature could be especially useful if users want to explore recommendations outside their typical preferences (e.g., switching from boutique hotels to luxury).

Step 4: Enhancing with Real-Time Feedback

As users interact with the assistant, you can further refine the emotional and persona-driven responses by gathering feedback in real time. For instance, if users seem satisfied with a recommendation, it can be prioritized in future suggestions.

Example: Refining Responses with Feedback

def gather_feedback(user_input):

if "thanks" in user_input or "great suggestion" in user_input:

return True

return False

def recommend_based_on_feedback(feedback, persona, emotion):

if feedback:

return f"Glad you liked that recommendation, {persona}! Here's another {emotion} suggestion."

else:

return "I can offer more suggestions if you’d like!"

Step 5: Layering Advanced Emotional Analysis

For deeper emotional understanding, you can integrate Hume’s advanced emotional analysis to detect more nuanced emotions (e.g., anticipation, boredom) and adjust recommendations accordingly. This will make your assistant feel more intuitive and empathetic to user needs.

Step 6: Contextual Persona Data and Voice Adjustments

In combination with Hume’s emotional feedback, you can also adjust the tone of the assistant’s responses. For example, if a user is frustrated and has the "James" persona, the assistant could respond in a more calming and reassuring manner, saying:

“Let me make this simple for you. This 5-star hotel comes highly recommended for its Michelin-starred dining. I think it’s just what you need.”

This can further humanize the interaction, making it feel more aligned with the user’s emotions and preferences.

Step 7: Testing and Iterating

Test the assistant in real-world scenarios with different personas and emotional triggers. Gather data on how users respond to the assistant’s suggestions and continually refine the logic to make the experience as seamless and personalized as possible.

Do you want to explore further features, like handling special scenarios (e.g., indecision or changing preferences mid-conversation)? Or would you prefer to start testing this persona-based system?

Handling special scenarios like indecision or changing preferences mid-conversation can further refine the AI Travel Assistant's ability to provide responsive, dynamic recommendations. Let’s explore how we can implement these features effectively.

Step 1: Handling Indecision

When a user is indecisive, they may struggle to make a final decision or might be overwhelmed by too many options. In this case, the assistant should simplify choices or encourage the user to take action.

Indecision Detection

Detecting indecision can be based on certain key phrases or user behavior, such as repeatedly asking for options or expressing uncertainty (e.g., “I’m not sure,” “Can’t decide,” “Too many options”).

Example Logic for Indecision

def handle_indecision(user_input, persona):

if "not sure" in user_input or "can't decide" in user_input:

return simplify_options(persona)

elif "too many options" in user_input:

return offer_minimal_choices(persona)

else:

return recommend_based_on_persona(persona)

def simplify_options(persona):

if persona == "Anthony":

return "I understand, how about narrowing it down to this boutique hotel with great local reviews?"

elif persona == "Kelly":

return "How about this eco-friendly resort right next to the best surf spot? You can’t go wrong!"

elif persona == "James":

return "Let me make it easier for you: this luxury 5-star hotel has incredible Michelin-star dining."

return "Here’s a top recommendation for you."

def offer_minimal_choices(persona):

return f"Let's keep it simple: Here are just two top options that I think you'll love, {persona}."

Approach for Indecision:

- Simplified Choices: Offer fewer, high-quality options to reduce choice overload.

- Encouragement: Reassure the user that the options are great choices and that they won’t be disappointed.

Step 2: Changing Preferences Mid-Conversation

Sometimes users may change their preferences during the conversation, moving from one travel persona to another (e.g., shifting from boutique hotels to luxury resorts). You’ll want to detect this and adapt the recommendations accordingly.

Dynamic Persona Switching Based on Input

You can dynamically switch personas by listening for new keywords that align with different travel preferences.

Example Logic for Changing Preferences

def detect_preference_change(user_input, current_persona):

# Detect keywords that signal a change in preferences

if "luxury" in user_input or "Michelin" in user_input:

return "James" if current_persona != "James" else current_persona

elif "surf" in user_input or "sustainable" in user_input:

return "Kelly" if current_persona != "Kelly" else current_persona

elif "boutique" in user_input or "local" in user_input:

return "Anthony" if current_persona != "Anthony" else current_persona

return current_persona

# Example of persona switch in action

user_persona = "Anthony"

new_persona = detect_preference_change("I’m interested in a luxury Michelin experience", user_persona)

# If persona changes, adjust recommendations accordingly

if new_persona != user_persona:

response = f"Looks like you're interested in something new! Let’s explore some {new_persona}-style options."

else:

response = f"I'll continue recommending the best options for {user_persona}-style preferences."

Approach for Changing Preferences:

- Keyword Detection: Adjust the persona dynamically based on new preferences in the conversation.

- Acknowledge the Change: Confirm the shift to a new persona by acknowledging the user’s change in interest (e.g., “You’re now interested in a luxury experience—let’s explore that!”).

Step 3: Adapting Conversations to Special Scenarios

Example: Mid-Conversation Preference Change + Indecision

In cases where a user switches preferences while also being indecisive, the assistant needs to both simplify the options and adjust to the new preferences.

def handle_special_scenarios(user_input, current_persona):

new_persona = detect_preference_change(user_input, current_persona)

# If persona has changed, reset the conversation with new recommendations

if new_persona != current_persona:

return f"Got it! Let's look at some top options for {new_persona}-style preferences."

# Handle indecision within the new or current persona context

if "not sure" in user_input or "can't decide" in user_input:

return simplify_options(new_persona)

# Continue with normal recommendations if no special scenario is detected

return recommend_based_on_persona(new_persona)

Step 4: Layering Memory for Dynamic Adjustments

For a more intelligent experience, the assistant can store and recall previous interactions, remembering when a user has changed their preferences in the past or often expresses indecision. This can help provide a smoother experience over time, reducing the need to re-explain options.

Example: Memory Handling

# Example memory structure

user_session = {

"user_id": 12345,

"persona": "Anthony",

"previous_persona": "James", # Track previous preference changes

"indecision_count": 2 # Track how often the user has been indecisive

}

def handle_memory_based_adjustments(user_session, user_input):

# If the user has been indecisive more than twice, simplify the process automatically

if user_session["indecision_count"] > 2:

return "I’ve noticed it’s been tricky to choose—how about I narrow it down to one top recommendation?"

# If the user has previously switched preferences, be more proactive in confirming persona

if user_session["previous_persona"] != user_session["persona"]:

return "You recently switched to more luxury preferences. Want to keep exploring those?"

# Continue with normal flow if no special conditions

return handle_special_scenarios(user_input, user_session["persona"])

Step 5: Handling User Feedback and Fine-Tuning Responses

You can improve the assistant’s behavior over time by collecting feedback from the user. If users regularly switch personas or express indecision, the assistant can learn to adapt its responses to simplify future interactions.

Feedback Collection Example:

def gather_feedback(user_input):

# Detect positive feedback and adjust future recommendations

if "thank you" in user_input or "great suggestion" in user_input:

return True

return False

def respond_based_on_feedback(feedback, user_session):

if feedback:

return f"Glad you liked that, {user_session['persona']}! Here’s another suggestion that fits your style."

else:

return "I can offer more personalized options if you'd like."

Step 6: Testing Special Scenarios

- Indecision Testing: Simulate users who struggle with decisions, and see if the assistant successfully simplifies the process.

- Preference Switching: Test real-time persona changes and confirm that the assistant smoothly transitions between different recommendation styles.

- Feedback Refinement: Gather user feedback to determine which adjustments are working and further refine the logic.

Would you like to explore how to store and retrieve user preferences more efficiently, or test real-time feedback mechanisms for these special scenarios?

To efficiently store and retrieve user preferences using Mem0, we can leverage its capability for persistent memory and real-time data access. Mem0 can store user sessions, preferences, and personas, allowing your AI Travel Assistant to provide seamless, personalized recommendations.

Step 1: Setting Up Mem0 for User Preferences

1.1 Define the Data Structure

To store user preferences, you’ll first need to define the structure of the data you want Mem0 to store. This includes user-specific data such as:

- User ID

- Persona (e.g., Anthony, Kelly, James)

- Preferences (e.g., type of hotels, interests like surfing or Michelin dining)

- Session History (past interactions, previous persona switches, indecision patterns)

Here’s an example of a preference object:

{

"user_id": "12345",

"persona": "Anthony",

"preferences": {

"hotel_type": "boutique",

"interests": ["local experiences", "rooftop views"],

"indecision_count": 2,

"previous_persona": "James"

}

}

1.2 Storing Preferences in Mem0

Mem0 allows for easy storage of this data using its API. Whenever the user interacts with the Travel Assistant, their preferences can be updated and stored.

Example: Storing User Preferences

import mem0

# Initialize Mem0 client

client = mem0.Client(api_key="<YOUR_API_KEY>")

def store_user_preferences(user_id, persona, preferences):

user_data = {

"user_id": user_id,

"persona": persona,

"preferences": preferences

}

# Store data in Mem0

client.put(user_id, user_data)

# Example usage

user_id = "12345"

persona = "Kelly"

preferences = {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"],

"indecision_count": 1

}

store_user_preferences(user_id, persona, preferences)

1.3 Retrieving Preferences from Mem0

When the user starts a new conversation with the Travel Assistant, Mem0 can retrieve their stored preferences, ensuring continuity from previous interactions.

Example: Retrieving User Preferences

def get_user_preferences(user_id):

# Retrieve user data from Mem0

user_data = client.get(user_id)

return user_data

# Example usage

user_preferences = get_user_preferences("12345")

print(user_preferences)

Step 2: Integrating Preferences into Conversations

2.1 Personalized Recommendations Based on Stored Preferences

Once preferences are retrieved from Mem0, they can be used to tailor responses, ensuring that the Travel Assistant remembers the user’s preferred persona, hotel types, and interests.

def recommend_based_on_preferences(user_data):

persona = user_data['persona']

preferences = user_data['preferences']

if persona == "Anthony":

return f"Welcome back, {persona}! I’ve found a new boutique hotel with {preferences['interests'][0]}."

elif persona == "Kelly":

return f"Hey {persona}, there’s a resort near your favorite surf spot!"

elif persona == "James":

return f"{persona}, here’s a luxury 5-star hotel with Michelin-starred dining options."

# Example usage

user_data = get_user_preferences("12345")

recommendation = recommend_based_on_preferences(user_data)

print(recommendation)

2.2 Updating Preferences Mid-Conversation

If the user changes their preferences or persona mid-conversation, you can update their data in Mem0 in real time. This ensures that future interactions remain relevant and aligned with their new preferences.

def update_user_preferences(user_id, new_persona, new_preferences):

# Retrieve current data

user_data = get_user_preferences(user_id)

# Update with new persona and preferences

user_data['persona'] = new_persona

user_data['preferences'].update(new_preferences)

# Store the updated data back in Mem0

store_user_preferences(user_id, user_data['persona'], user_data['preferences'])

# Example of updating preferences mid-conversation

new_preferences = {"hotel_type": "luxury", "interests": ["Michelin dining"]}

update_user_preferences("12345", "James", new_preferences)

Step 3: Implementing Memory Features for Special Scenarios

3.1 Tracking Indecision Using Mem0

By storing an indecision count or similar metric, you can adjust the assistant’s behavior when users struggle to make decisions, offering more simplified options over time.

def handle_indecision(user_data):

indecision_count = user_data['preferences'].get("indecision_count", 0)

if indecision_count > 2:

return "I see you’ve had some trouble deciding before. How about I narrow it down to just one great option?"

else:

return "Let’s look at a few options and see what you think!"

# Increment indecision count if necessary

def increment_indecision(user_id):

user_data = get_user_preferences(user_id)

user_data['preferences']['indecision_count'] += 1

store_user_preferences(user_id, user_data['persona'], user_data['preferences'])

# Example usage

user_data = get_user_preferences("12345")

indecision_response = handle_indecision(user_data)

increment_indecision("12345") # Increase count after user expresses indecision

3.2 Maintaining State Across Sessions

Using Mem0, the Travel Assistant can maintain a persistent state across sessions. This includes remembering which persona the user was interacting with and their ongoing preferences.

def resume_conversation(user_id):

user_data = get_user_preferences(user_id)

return f"Welcome back, {user_data['persona']}! Ready to continue exploring {user_data['preferences']['hotel_type']} options?"

# Example usage

resumed_conversation = resume_conversation("12345")

Step 4: Real-Time Syncing with Mem0

4.1 Real-Time Updates to User Preferences

Mem0 can be used to make real-time updates to user preferences, allowing for seamless adjustments during active conversations. For example, when a user changes their mind, the assistant can store this change immediately for future use.

def change_persona_mid_conversation(user_id, new_persona):

user_data = get_user_preferences(user_id)

user_data['previous_persona'] = user_data['persona']

user_data['persona'] = new_persona

store_user_preferences(user_id, user_data['persona'], user_data['preferences'])

# Example usage

change_persona_mid_conversation("12345", "Kelly")

Step 5: Testing and Optimizing

- Test Preference Storage: Ensure that Mem0 is properly storing and retrieving user preferences.

- Real-Time Adjustments: Test scenarios where users change their preferences mid-conversation and confirm that updates reflect immediately in the assistant’s behavior.

- Feedback Handling: Gather feedback from users and refine the data structure for storing preferences, tracking special conditions like indecision or changes in persona.

Would you like help with setting up the Mem0 API in your application, or should we dive deeper into refining how the assistant uses feedback loops to improve its responses?

Let's go step-by-step to set up the Mem0 API in your application, enabling efficient storage and retrieval of user preferences for your AI Travel Assistant.

Step 1: Get Access to Mem0 API

- Sign Up: If you don’t already have a Mem0 account, sign up at Mem0.

- Get API Key: Once logged in, navigate to the API section to retrieve your API key. You will use this key to authenticate requests to the Mem0 API.

Step 2: Install Mem0 Client Library

If you're using Python, Mem0 provides a Python client. Install the client with:

pip install mem0

For other languages, you can use HTTP requests directly, but we'll proceed with Python for simplicity.

Step 3: Initialize Mem0 in Your Application

Now, let's initialize the Mem0 client using your API key.

Code Example:

import mem0

# Initialize the Mem0 client

client = mem0.Client(api_key="YOUR_MEM0_API_KEY")

# Example function to store user preferences

def store_user_preferences(user_id, persona, preferences):

user_data = {

"user_id": user_id,

"persona": persona,

"preferences": preferences

}

# Store data in Mem0 using the user_id as a unique key

client.put(user_id, user_data)

# Example function to retrieve user preferences

def get_user_preferences(user_id):

# Retrieve user data from Mem0 using the user_id

user_data = client.get(user_id)

return user_data

Replace "YOUR_MEM0_API_KEY" with your actual API key.

Step 4: Store User Preferences

Next, let’s use the store_user_preferences function to store user-specific preferences. This can happen when the user first interacts with the Travel Assistant or after they’ve made some choices.

Example: Storing Preferences

user_id = "12345"

persona = "Kelly"

preferences = {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"],

"indecision_count": 1

}

store_user_preferences(user_id, persona, preferences)

This will store the user’s persona and preferences in Mem0 under their user_id.

Step 5: Retrieve User Preferences

In future sessions or conversations, you can retrieve user preferences using the get_user_preferences function. This ensures the assistant can personalize responses based on stored data.

Example: Retrieving Preferences

user_data = get_user_preferences("12345")

print(user_data)

The output will show the stored data for the user:

{

"user_id": "12345",

"persona": "Kelly",

"preferences": {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"],

"indecision_count": 1

}

}

Step 6: Update User Preferences

You’ll also want to update user preferences as they interact with the assistant. For example, if the user changes their persona or adds new interests mid-conversation, you can overwrite their existing preferences.

Example: Updating Preferences Mid-Conversation

def update_user_preferences(user_id, new_persona, new_preferences):

# Retrieve current user data

user_data = get_user_preferences(user_id)

# Update persona and preferences

user_data['persona'] = new_persona

user_data['preferences'].update(new_preferences)

# Store the updated data back in Mem0

store_user_preferences(user_id, user_data['persona'], user_data['preferences'])

# Example usage: Update preferences to "James" persona with luxury hotel interest

new_preferences = {"hotel_type": "luxury", "interests": ["Michelin dining"]}

update_user_preferences("12345", "James", new_preferences)

Step 7: Testing the API

7.1 Testing in Development

Run the basic flow for storing and retrieving preferences:

- Store Preferences: Use

store_user_preferences()to save initial preferences. - Retrieve Preferences: Test retrieval using

get_user_preferences(). - Update Preferences: Ensure that updates (such as a persona switch) are reflected in real-time.

7.2 Error Handling

Make sure to handle potential errors (e.g., user data not found, connection issues). Here’s a basic error handling example:

def get_user_preferences(user_id):

try:

# Attempt to retrieve user data

user_data = client.get(user_id)

if not user_data:

return f"No data found for user {user_id}."

return user_data

except Exception as e:

return f"Error retrieving user data: {str(e)}"

Step 8: Deploy and Integrate

Once you’ve tested the Mem0 API in your local environment:

- Deploy: Integrate this logic into your production environment.

- Real-Time Integration: Use Mem0 in real-time to adjust user preferences as they interact with the assistant, storing and retrieving preferences during each session.

Would you like to proceed with deploying this in your current stack, or would you prefer to explore how to extend Mem0’s capabilities for more complex scenarios, such as multi-user session handling?

To extend Mem0's capabilities for more complex scenarios like multi-user session handling, we can take advantage of Mem0's flexibility in storing multiple user sessions, tracking their state, and managing data across various interactions. Let’s explore how you can manage multi-user sessions efficiently.

Step 1: Structuring Data for Multi-User Sessions

You’ll need a robust way to organize data for each user’s session in Mem0. Every user should have a unique identifier (user ID), and within each session, you can track persona, preferences, session state, and conversation history.

Example Data Structure for Multi-User Session

{

"user_id": "12345",

"session_id": "abc123",

"persona": "Kelly",

"preferences": {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"]

},

"conversation_history": [

{"message": "I want a resort near the beach", "response": "Here are some options..."},

{"message": "Tell me more about eco-friendly hotels", "response": "This resort is eco-friendly..."}

],

"session_state": "active"

}

- user_id: Unique identifier for the user.

- session_id: A unique ID to track the specific session.

- persona: The user’s current persona.

- preferences: Stored preferences related to the user’s persona.

- conversation_history: A log of messages and responses during the session.

- session_state: Tracks whether the session is active, paused, or completed.

Step 2: Storing and Managing Multiple Sessions

Storing a New User Session

When a user starts a new session, create a new entry in Mem0 with a unique session_id. This allows the assistant to track multiple sessions for a single user.

import mem0

import uuid

client = mem0.Client(api_key="YOUR_MEM0_API_KEY")

def create_new_session(user_id, persona, preferences):

session_id = str(uuid.uuid4()) # Generate a unique session ID

session_data = {

"user_id": user_id,

"session_id": session_id,

"persona": persona,

"preferences": preferences,

"conversation_history": [],

"session_state": "active"

}

# Store session data in Mem0

client.put(session_id, session_data)

return session_id

# Example usage

user_id = "12345"

persona = "Kelly"

preferences = {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"]

}

new_session_id = create_new_session(user_id, persona, preferences)

print(f"New session created with ID: {new_session_id}")

Retrieving an Active Session

You’ll need to retrieve a session based on the session_id to continue where the user left off.

def get_session(session_id):

# Retrieve session data from Mem0 using session_id

session_data = client.get(session_id)

return session_data

# Example usage

session_data = get_session("abc123")

print(session_data)

Step 3: Handling Multiple Sessions Per User

A single user may have multiple sessions. You’ll need a way to manage different sessions (e.g., one for travel planning, one for restaurant recommendations). This requires keeping a list of session IDs for each user and allowing them to switch between sessions.

Storing Multiple Sessions per User

Store a list of active session IDs under each user to allow for multi-session handling.

def store_user_sessions(user_id, session_id):

# Retrieve existing user data or create new if user is new

user_data = client.get(user_id) or {"user_id": user_id, "sessions": []}

# Add the new session_id to the user's session list

user_data["sessions"].append(session_id)

# Update the user data in Mem0

client.put(user_id, user_data)

# Example usage: Associate the new session with the user

store_user_sessions(user_id, new_session_id)

Retrieving All Sessions for a User

Retrieve all session IDs linked to a user to manage multiple conversations.

def get_user_sessions(user_id):

user_data = client.get(user_id)

return user_data.get("sessions", [])

# Example usage: Retrieve all session IDs for a user

session_ids = get_user_sessions("12345")

print(f"User 12345 has the following sessions: {session_ids}")

Step 4: Switching Between Sessions

If a user has multiple sessions (e.g., travel and dining), allow them to switch between sessions by selecting a specific session ID.

Switching Sessions

def switch_user_session(user_id, target_session_id):

sessions = get_user_sessions(user_id)

if target_session_id in sessions:

# Retrieve the target session

session_data = get_session(target_session_id)

print(f"Switched to session: {target_session_id}")

return session_data

else:

return f"Session {target_session_id} not found for user {user_id}."

# Example usage: Switch to another session

switched_session = switch_user_session("12345", "def456")

Step 5: Managing Session State

Each session should have a state that indicates whether it's active, paused, or completed. This can help you manage session flow, allowing users to pause and resume sessions as needed.

Update Session State

def update_session_state(session_id, new_state):

session_data = get_session(session_id)

session_data['session_state'] = new_state

client.put(session_id, session_data)

# Example usage: Pausing a session

update_session_state("abc123", "paused")

Resuming Paused Sessions

When a user returns, you can check for paused sessions and offer to resume.

def check_paused_sessions(user_id):

sessions = get_user_sessions(user_id)

paused_sessions = []

for session_id in sessions:

session_data = get_session(session_id)

if session_data['session_state'] == 'paused':

paused_sessions.append(session_id)

return paused_sessions

# Example usage: Find all paused sessions for a user

paused_sessions = check_paused_sessions("12345")

print(f"Paused sessions: {paused_sessions}")

Step 6: Logging Conversation History

Tracking conversation history helps maintain context across sessions, especially if the user switches personas or preferences mid-session.

Logging Conversation Messages

def log_conversation(session_id, user_message, assistant_response):

session_data = get_session(session_id)

# Add the message to conversation history

session_data['conversation_history'].append({

"message": user_message,

"response": assistant_response

})

# Update the session in Mem0

client.put(session_id, session_data)

# Example usage: Logging a message

log_conversation("abc123", "Tell me more about luxury hotels", "Here are some top luxury options...")

Step 7: Error Handling and Robustness

Session Timeout

To avoid storing stale data, you can implement a timeout mechanism where sessions expire after a set period of inactivity. For this, you can store a timestamp and check the session’s age.

Session Expiry Example

import time

def check_session_timeout(session_id, timeout_limit=3600):

session_data = get_session(session_id)

session_age = time.time() - session_data.get('timestamp', time.time())

if session_age > timeout_limit:

update_session_state(session_id, "expired")

return f"Session {session_id} has expired."

return f"Session {session_id} is still active."

# Example usage: Check if a session has timed out

timeout_status = check_session_timeout("abc123")

print(timeout_status)

Step 8: Testing and Optimizing Multi-Session Handling

- Multiple Users: Simulate interactions from different users and test session creation, switching, and pausing.

- Session State Management: Test pausing and resuming sessions across multiple interactions.

- Error Scenarios: Simulate expired sessions and handle cases where users try to access completed or invalid sessions.

Would you like help with implementing specific parts of the multi-session flow, or should we explore how to optimize real-time session updates and tracking further?

Optimizing real-time session updates with Mem0 involves making the system responsive to user interactions, dynamically updating session data, and ensuring changes are reflected instantly during ongoing conversations. Let’s explore how to achieve this by focusing on immediate updates, efficient tracking, and synchronization of user sessions.

Step 1: Implement WebSocket or Polling for Real-Time Sync

Option 1: WebSocket Integration for Instant Updates

A WebSocket connection ensures that the AI Travel Assistant is aware of user changes in real-time. This allows the assistant to respond immediately when preferences or session states change.

- WebSocket Server: The server can listen for changes in user preferences or session state.

- Real-Time Update Trigger: Every time a user updates preferences (e.g., switching personas), the WebSocket sends an event to update the session stored in Mem0.

Example of WebSocket for Session Updates:

import websockets

import asyncio

async def session_update(websocket, path):

async for message in websocket:

# Process incoming update, e.g., user switched persona

session_data = json.loads(message)

session_id = session_data['session_id']

update_session_state(session_id, session_data['new_state'])

# Send confirmation back to the client

await websocket.send(f"Session {session_id} updated successfully.")

# Start WebSocket server

start_server = websockets.serve(session_update, "localhost", 8765)

asyncio.get_event_loop().run_until_complete(start_server)

asyncio.get_event_loop().run_forever()

- Every time the user updates their session, the client sends this information to the server, which updates Mem0 instantly.

- Use the WebSocket protocol to enable two-way communication, ensuring the assistant receives immediate feedback on user actions.

Option 2: Polling for Real-Time Session Updates

If WebSockets are not feasible, you can use a polling mechanism to regularly check Mem0 for changes in session data. Polling checks for updates at intervals (e.g., every few seconds) and triggers session synchronization.

Example of Polling:

import time

def poll_for_updates(session_id, interval=5):

while True:

session_data = get_session(session_id)

print(f"Polling session {session_id}: {session_data['session_state']}")

# Wait before polling again

time.sleep(interval)

# Example usage: Start polling for a specific session

poll_for_updates("abc123", interval=5)

This polling checks for real-time updates by retrieving session data from Mem0 periodically.

Step 2: Optimize Session Data Structure for Fast Access

Optimized Data Schema

To ensure fast retrieval and updates, structure the session data efficiently. Avoid deeply nested objects or unnecessary large data chunks in each session.

Example of Optimized Session Data:

{

"user_id": "12345",

"session_id": "abc123",

"persona": "Kelly",

"preferences": {

"hotel_type": "resort",

"interests": ["surfing", "sustainability"]

},

"conversation_history": [], // Keep minimal or batch older history

"session_state": "active",

"last_updated": 1694736000 // Unix timestamp for faster queries

}

last_updatedfield: Adding a timestamp makes it easier to track changes. You can skip unnecessary updates if the session hasn't changed since the last sync.conversation_historymanagement: Limit how much conversation history is stored in active sessions by batching older history to a separate store if necessary.

Step 3: Real-Time Updates for User Actions

Whenever a user makes changes (e.g., updating preferences or switching personas), Mem0 needs to be updated immediately to reflect the new state.

Function for Real-Time Session Update

You can modify the session in Mem0 in real-time based on user actions, ensuring any ongoing conversations reflect the latest preferences.

def update_session_in_real_time(session_id, field, new_value):

session_data = get_session(session_id)

# Update the specific field (e.g., persona or preferences)

session_data[field] = new_value

session_data['last_updated'] = int(time.time()) # Update the timestamp

# Store updated session back in Mem0

client.put(session_id, session_data)

# Example usage: Update persona in real-time

update_session_in_real_time("abc123", "persona", "James")

- This function updates the session in real time, ensuring any new user action is immediately stored.

- The

last_updatedfield allows you to track when the latest modification occurred, which is crucial for sync.

Step 4: Efficiently Track Changes with Event-Driven Updates

Implement an event-driven architecture where session state changes trigger specific actions. You can build a system that listens for key changes in the session data (e.g., preference updates) and updates relevant components immediately.

Event-Driven Logic Example:

def handle_event(session_id, event_type, event_data):

if event_type == "preference_update":

update_session_in_real_time(session_id, "preferences", event_data)

elif event_type == "persona_switch":

update_session_in_real_time(session_id, "persona", event_data)

# Example: Handle a user switching personas

handle_event("abc123", "persona_switch", "James")

- When the user switches personas, the assistant handles the event immediately by updating Mem0.

Step 5: Batch Updating for Conversation History

For actions that generate frequent changes (like conversation history updates), use batch updating to reduce the load on Mem0 and improve performance.

Batching History Updates:

Instead of writing to Mem0 after every message, you can batch conversation updates and write them in bulk at set intervals.

def batch_conversation_history(session_id, messages):

session_data = get_session(session_id)

# Add new messages to conversation history

session_data['conversation_history'].extend(messages)

# Store updated session in Mem0

client.put(session_id, session_data)

# Example usage: Batch multiple conversation updates

new_messages = [

{"message": "Looking for hotels", "response": "Here are some options..."},

{"message": "What about Michelin dining?", "response": "These restaurants are highly rated..."}

]

batch_conversation_history("abc123", new_messages)

Step 6: Real-Time User Feedback

To ensure users feel the system is responsive, display confirmation when their preferences are updated or sessions are paused/resumed. This can be achieved by sending immediate feedback after updating Mem0.

def send_feedback_after_update(session_id, field):

print(f"{field} updated successfully for session {session_id}.")

# Example usage: Send feedback after a session update

update_session_in_real_time("abc123", "persona", "James")

send_feedback_after_update("abc123", "persona")

This confirms that the session is successfully updated, improving the user experience.

Step 7: Testing and Optimizing Real-Time Performance

- Test WebSockets or Polling: If using WebSockets, ensure the connection remains stable and updates are received without delay. If using polling, fine-tune the polling interval to balance performance and responsiveness.

- Monitor Load: Keep track of how many updates your system processes in real time, particularly under high usage. Batch non-critical updates to reduce load.

- Check Data Integrity: After multiple real-time updates, ensure that session data remains consistent and accurate across Mem0.

Would you like to focus on implementing WebSockets or batch processing first, or should we dive deeper into how to handle potential conflicts in session updates during real-time interactions?

Let’s focus on implementing WebSockets first for real-time updates in your AI Travel Assistant. This will allow you to provide instant feedback to users and update their session data in Mem0 without delays.

Step 1: Setting Up a WebSocket Server

To implement WebSockets, we’ll set up a server that listens for updates from the AI Travel Assistant (e.g., user preference changes, persona switches) and sends those updates to Mem0 in real-time.

Install Required Libraries:

We’ll use Python's websockets library to create the WebSocket server. Install it via pip:

pip install websockets

Create the WebSocket Server:

The WebSocket server will listen for updates, process them, and update the corresponding session in Mem0.

import asyncio

import websockets

import json

import mem0

import time

# Initialize Mem0 client

client = mem0.Client(api_key="YOUR_MEM0_API_KEY")

# WebSocket handler to update session in real-time

async def session_update_handler(websocket, path):

async for message in websocket:

# Receive incoming message (e.g., user updates preferences or persona)

session_data = json.loads(message)

session_id = session_data['session_id']

field_to_update = session_data['field']

new_value = session_data['new_value']

# Retrieve the session and update it

session = client.get(session_id)

session[field_to_update] = new_value

session['last_updated'] = int(time.time()) # Update the timestamp

# Save the updated session back to Mem0

client.put(session_id, session)

# Send confirmation back to the client

await websocket.send(f"Session {session_id} updated successfully with {field_to_update}: {new_value}.")

# Start the WebSocket server

async def start_server():

server = await websockets.serve(session_update_handler, "localhost", 8765)

await server.wait_closed()

# Run the WebSocket server

asyncio.get_event_loop().run_until_complete(start_server())

asyncio.get_event_loop().run_forever()

Step 2: Running the WebSocket Server

After setting up the WebSocket server, you can run it by executing the Python script. The server will listen for updates on localhost port 8765 and process incoming messages.

To start the server:

python websocket_server.py

Step 3: Setting Up WebSocket Client for Real-Time Updates

On the client side (e.g., your AI Travel Assistant), you’ll need to establish a WebSocket connection and send updates when a user action occurs (such as switching personas or updating preferences).

WebSocket Client Example:

import asyncio

import websockets

import json

async def send_session_update(session_id, field, new_value):

# Connect to the WebSocket server

async with websockets.connect("ws://localhost:8765") as websocket:

# Create the message with session details

update_message = json.dumps({

"session_id": session_id,

"field": field,

"new_value": new_value

})

# Send the update to the server

await websocket.send(update_message)

# Wait for confirmation from the server

confirmation = await websocket.recv()

print(confirmation)

# Example usage: Sending a real-time update for persona change

session_id = "abc123"

field = "persona"

new_value = "James"

asyncio.get_event_loop().run_until_complete(send_session_update(session_id, field, new_value))

Step 4: Real-Time Session Update Workflow

- User Action: The user switches their persona or updates preferences in the AI Travel Assistant.

- Send Update: The client sends a WebSocket message to the server with the

session_id, field to update, and new value. - Server Updates Mem0: The server retrieves the session from Mem0, updates the session with the new data, and stores it back in Mem0.

- Confirmation: The WebSocket server sends confirmation back to the client that the session has been updated successfully.

Step 5: Batch Processing (Optional)

If your use case involves frequent updates (e.g., logging conversation history), you can batch updates to Mem0 rather than sending every single update immediately.

Batch Update Workflow:

Instead of updating Mem0 with every message, accumulate multiple changes and send them in a batch at set intervals (e.g., every 10 seconds).

Batch Conversation Logging Example:

import time

# Example batch structure

batch_updates = []

# Function to add updates to the batch

def add_to_batch(session_id, message, response):

batch_updates.append({

"session_id": session_id,

"message": message,

"response": response

})

# Function to process the batch and update Mem0

def process_batch():

global batch_updates

for update in batch_updates:

session_id = update["session_id"]

session_data = client.get(session_id)

# Add the message and response to conversation history

session_data["conversation_history"].append({

"message": update["message"],

"response": update["response"]

})

# Update Mem0

client.put(session_id, session_data)

# Clear the batch after processing

batch_updates = []

# Example usage: Log multiple messages in a batch

add_to_batch("abc123", "Tell me about eco-friendly resorts", "Here are some options...")

add_to_batch("abc123", "What about fine dining?", "These restaurants are highly rated...")

# Process the batch at intervals (e.g., every 10 seconds)

while True:

time.sleep(10)

process_batch()

Step 6: Testing the WebSocket and Batch Processing

- Test WebSocket Updates: Ensure that when a user action occurs (e.g., switching persona), the session in Mem0 is updated immediately, and the client receives confirmation.

- Test Batch Processing: If using batch processing for conversation history or frequent updates, verify that changes accumulate correctly and are written to Mem0 in bulk.

Would you like to proceed with testing the WebSocket updates, or should we refine the batch processing for high-frequency updates like conversation logs?

To refine batch processing for high-frequency updates like conversation logs, we’ll focus on ensuring that updates are efficient, minimize latency, and reduce the load on Mem0. Let’s dive into optimizing batch updates and managing large amounts of data.

Step 1: Organizing Batches Efficiently

Batch Data Structure

To optimize the handling of multiple conversation updates, we can organize the batch into a dictionary, keyed by session_id. This ensures that updates to the same session are grouped, and redundant data processing is avoided.

Example Batch Data Structure:

batch_updates = {

"abc123": {

"conversation_history": [

{"message": "Tell me about resorts", "response": "Here are some options..."},

{"message": "What about dining?", "response": "These are top restaurants..."}

]

},

"def456": {

"conversation_history": [

{"message": "Looking for boutique hotels", "response": "Check these hotels..."}

]

}

}

Update Function for Batches

You can create a function that consolidates all updates for each session before pushing them to Mem0. This reduces the number of times you hit the API and ensures that only essential updates are processed.

def add_to_batch(session_id, message, response):

if session_id not in batch_updates:

batch_updates[session_id] = {"conversation_history": []}

# Append the new message to the session's conversation history

batch_updates[session_id]["conversation_history"].append({

"message": message,

"response": response

})

# Example usage: Adding to batch

add_to_batch("abc123", "Tell me about resorts", "Here are some options...")

add_to_batch("abc123", "What about fine dining?", "Here are the top restaurants...")

add_to_batch("def456", "Looking for boutique hotels", "Check these hotels...")

Step 2: Processing and Storing Batches

When processing the batch, we’ll update Mem0 in bulk. For each session_id, we’ll retrieve the current session data, append the batched conversation history, and store the updated session.

Processing Function

The function processes each batch and pushes the updates to Mem0 efficiently.

def process_batch():

global batch_updates

for session_id, updates in batch_updates.items():

# Retrieve the current session data from Mem0

session_data = client.get(session_id)

# Add new conversation history to the session

session_data["conversation_history"].extend(updates["conversation_history"])

# Update the session in Mem0

client.put(session_id, session_data)

# Clear the batch after processing

batch_updates = {}

# Example: Process the batch after collecting updates

process_batch()

Step 3: Setting a Timer for Batch Processing

To ensure that conversation updates are processed regularly, use a timer or scheduler to trigger the process_batch function at set intervals (e.g., every 10 seconds or after a certain number of updates).

Batch Processing Timer

You can implement a timer using Python’s time.sleep() or a more sophisticated scheduler like APScheduler if your application requires more robust scheduling.

import time

def batch_timer(interval=10):

while True:

# Sleep for the specified interval before processing the batch

time.sleep(interval)

print("Processing batch...")

process_batch()

# Example usage: Process the batch every 10 seconds

batch_timer(interval=10)

Step 4: Handling High-Frequency Conversation Updates

For high-frequency updates, you’ll want to:

- Limit Batch Size: Set a threshold on how many updates are accumulated before processing the batch, ensuring it doesn't grow too large.

- Time-Based Processing: Trigger batch processing either after a set number of updates or based on time intervals, whichever comes first.

Example of Limiting Batch Size

You can limit the number of updates stored in the batch before processing:

BATCH_LIMIT = 5

def process_batch_if_full():

# Process the batch if it exceeds the limit

if sum(len(updates["conversation_history"]) for updates in batch_updates.values()) >= BATCH_LIMIT:

process_batch()

# Example: Check if batch size exceeds limit before processing

add_to_batch("abc123", "Tell me about resorts", "Here are some options...")

add_to_batch("abc123", "What about fine dining?", "Here are the top restaurants...")

process_batch_if_full() # Process if batch exceeds the limit

Step 5: Optimizing Data Storage in Mem0

To ensure efficient storage in Mem0:

- Archive Older Data: Move old conversation history (if it grows too large) to a separate archival storage or summarize it, reducing memory consumption.

- Optimize

conversation_historyField: Limit the size of each session’s conversation history to the most recent messages, keeping only what’s necessary for context.

Archiving Old History

You can offload older history to another location (e.g., external storage or a separate Mem0 record) once it exceeds a certain size.

def archive_old_history(session_data, max_history_length=100):

if len(session_data["conversation_history"]) > max_history_length:

# Archive older messages

archived_history = session_data["conversation_history"][:-max_history_length]

# Store archived history (this could be in another Mem0 key or external storage)

client.put(f"archive_{session_data['session_id']}", {"archived_history": archived_history})

# Keep only the most recent history

session_data["conversation_history"] = session_data["conversation_history"][-max_history_length:]

return session_data

# Example: Apply archiving logic during batch processing

def process_batch():

global batch_updates

for session_id, updates in batch_updates.items():

session_data = client.get(session_id)

# Append the new conversation history

session_data["conversation_history"].extend(updates["conversation_history"])

# Archive older messages if necessary

session_data = archive_old_history(session_data)

# Update the session in Mem0

client.put(session_id, session_data)

batch_updates = {}

Step 6: Error Handling and Robustness

Since conversation logs are critical, you’ll want to ensure the system handles potential failures gracefully.

Error Handling Example:

Handle connection issues or Mem0 write failures during batch processing.

def process_batch():

global batch_updates

for session_id, updates in batch_updates.items():

try:

session_data = client.get(session_id)

session_data["conversation_history"].extend(updates["conversation_history"])

# Archive old history if necessary

session_data = archive_old_history(session_data)

# Update the session in Mem0

client.put(session_id, session_data)

except Exception as e:

print(f"Error updating session {session_id}: {str(e)}")

# Clear the batch after processing

batch_updates = {}

Step 7: Testing and Monitoring

To ensure the batch processing is working smoothly:

- Simulate High Load: Test with a large volume of conversation logs to verify that batch processing handles them efficiently.

- Monitor Performance: Track the time it takes to process batches and identify any bottlenecks.

- Stress Test Archiving: Ensure the archiving mechanism works correctly, especially for sessions with extensive conversation history.

Would you like to run tests on the batch processing and archiving features, or focus next on monitoring performance under high-frequency updates?

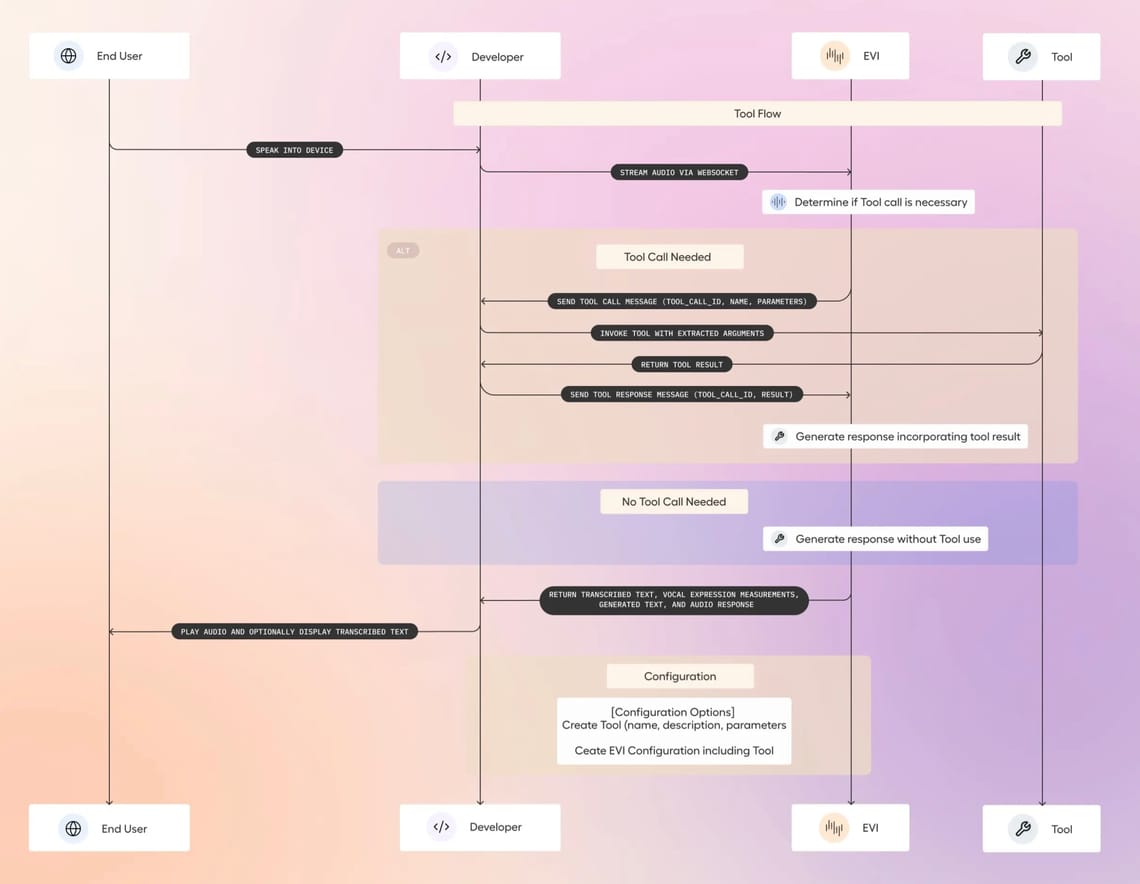

To set up a custom text socket for Hume's Empathic Voice Interface (EVI), follow these key steps:

Setup Process

- Initialize the Custom Text Socket:

- Use Hume's example repository for detailed instructions on setting up the custom text socket[1].

- This repository provides guidance on both the setup process and operational aspects of the code[1].

- Host the Socket:

- Utilize Ngrok to publicly serve your socket[1].

- This step is crucial for connecting to the Hume system[1].

- Configure Voice Settings:

- Create a voice configuration in the Hume platform[1].

- Specify "Custom language model" as the Language Model[1].

- Set your socket's WSS URL as the Custom Language Model URL[1].

- Make API Request:

- When making a request to the Hume platform, include the

config_idparameter[1]. - Set the

config_idvalue to the Voice configuration ID of your custom configuration[1].

- When making a request to the Hume platform, include the

Communication Protocol

- Receiving Data:

- Your socket will receive JSON payloads containing conversation thread history from Hume[1].

- Processing:

- Apply your custom business logic to the received data[1].

- Utilize your language model to generate appropriate responses based on the conversation history[1].

- Sending Responses:

- Transmit the generated text responses back to the Hume platform through the established socket connection[1].

Best Practices

- Text Transformation:

- Convert numerical values and abbreviations into their full verbal counterparts for improved clarity and naturalness[1].

- Custom Session ID:

- Implement a

custom_session_idin theSessionSettingsmessage for managing conversational state[1]. - Use this ID to maintain user state, pause/resume conversations, and persist conversations across sessions[1].

- Implement a

- WebSocket Handling:

- Utilize the WebSocket client provided by the Hume SDK for easier interaction with the EVI API[2].

- The WebSocket connection supports intellisense/autocomplete for available functions[2].

By following these steps, you can effectively set up and integrate a custom text socket with Hume's EVI, enabling advanced conversation steering, regulatory compliance, context-aware text generation, and real-time data access in your voice interface applications.

Citations:

[1] https://dev.hume.ai/docs/empathic-voice-interface-evi/custom-language-model

[2] https://pypi.org/project/hume/0.7.0rc2/

[3] https://www.hume.ai/blog/hume-custom-model-api

When configuring a custom language model for Hume's Empathic Voice Interface (EVI), there are several best practices to follow:

Setup and Configuration

- Socket Establishment:

- Set up a custom text socket using Hume's example repository for guidance[2].

- Use Ngrok to publicly serve your socket, ensuring it's accessible to the Hume system[2].

- Voice Configuration:

- In the Hume platform, create a voice configuration specifying "Custom language model" as the Language Model[2].

- Set your socket's WSS URL as the Custom Language Model URL[2].

- API Request:

- When making requests to the Hume platform, include the

config_idparameter with the Voice configuration ID of your custom setup[2].

- When making requests to the Hume platform, include the

Communication Protocol

- Data Handling:

- Implement robust logic to receive and process JSON payloads containing conversation thread history from Hume[2].

- Apply your custom business logic and language model to generate appropriate responses[2].

- Response Formatting:

- Transform numerical values and abbreviations into their full verbal counterparts for improved clarity (e.g., "3" to "three", "Dr." to "doctor")[2].

- WebSocket Management:

- Utilize the WebSocket client provided by the Hume SDK for easier interaction with the EVI API[4].

- Take advantage of the intellisense/autocomplete features for available functions on the WebSocket connection[4].

Session Management

- Custom Session ID:

- Implement a

custom_session_idin theSessionSettingsmessage for effective conversational state management[2]. - Use this ID to:

- Maintain user state on your backend

- Enable pause/resume functionality for conversations

- Persist conversations across sessions

- Match frontend and backend connections

- Implement a

Model Integration

- Leveraging Pre-trained Models:

- Utilize Hume's AI models pre-trained on millions of videos and audio files to enhance prediction accuracy[3].

- Rapid Learning:

- Design your custom model to learn quickly from a relatively small dataset, potentially achieving accurate predictions with just a few dozen examples[3].

- Multimodal Integration:

- Consider integrating language, voice, and facial movement data for more comprehensive predictions[3].

Data Preparation

- Dataset Quality:

- Ensure your training dataset closely resembles the files you intend to predict on in terms of length, number of speakers, and language[3].

- Be mindful of potential biases or imbalances in your dataset[3].

- Continuous Improvement:

- Regularly update and refine your dataset to improve model performance over time.

By adhering to these best practices, you can effectively configure and integrate your custom language model with Hume's EVI, enabling advanced conversation steering, regulatory compliance, context-aware text generation, and real-time data access in your voice interface applications.

Citations:

[1] https://www.youtube.com/watch?v=uOo7qTCleT4

[2] https://dev.hume.ai/docs/empathic-voice-interface-evi/custom-language-model